SEO Services Company: For SEO Services in India, SEO Company in India, Link Building Services in India, Google SEO Services in India, visit http://seo-servicescompany.blogspot.com.

Monday, May 31, 2010

SEO Tools

Generating a robots.txt file with this tool is ideal if you wish to block certain directories or files from search engines. To use the generator tool, enter the required information below and click the button. You will then be shown the text for the file. Copy this to a file called robots.txt and place it in the root of your website.
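As a rough illustration of the kind of text such a generator produces, here is a minimal Python sketch. The post does not describe the tool's actual interface. The function name `build_robots_txt` and its input format (a mapping from user-agent to disallowed paths, plus an optional sitemap URL) are hypothetical.

```python
from typing import Dict, List, Optional

def build_robots_txt(rules: Dict[str, List[str]], sitemap_url: Optional[str] = None) -> str:
    """Build robots.txt text from a hypothetical {user_agent: [disallowed paths]} mapping."""
    groups = []
    for agent, paths in rules.items():
        lines = [f"User-agent: {agent}"]
        if paths:
            lines.extend(f"Disallow: {path}" for path in paths)
        else:
            # An empty Disallow value means nothing is blocked for this agent.
            lines.append("Disallow:")
        groups.append("\n".join(lines))
    text = "\n\n".join(groups)
    if sitemap_url:
        text += f"\n\nSitemap: {sitemap_url}"
    return text + "\n"

print(build_robots_txt({"*": ["/cgi-bin/", "/private/"]}, "http://www.example.com/sitemap.xml"))
```

The output is what you would save as robots.txt in the site root. The paths and the example.com URL are placeholders.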

Robots.txt is a plain text file that sits at the root of a server. It tells search bots which content they are permitted or forbidden to crawl when they visit the website. When the text in your Flash content duplicates your HTML version, you can easily set the robots.txt file to exclude the SWF content and allow only the HTML content to search bots.
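A minimal sketch of checking such a rule with Python's standard `urllib.robotparser` module. It assumes a hypothetical layout where the SWF files live under a `/flash/` directory. This parser only does prefix matching on paths and does not understand the `*` wildcard extension some search engines support, so a directory rule is the safest thing to test with it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep bots out of the Flash directory, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /flash/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("http://www.example.com/flash/intro.swf",
            "http://www.example.com/index.html"):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{url}: {verdict}")
```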

Often, new website builders and owners forget the importance of site necessities like a simple sitemap. A sitemap is often seen as pointless, since it just replicates links already on the website. However, if you construct a good sitemap that highlights the important sections of your site, you can customize it to match your own needs. At the very least you should be using Google Analytics and Webmaster Tools to keep track of your site’s visitors. You should submit your sitemap to Webmaster Tools and make sure you install the correct robots.txt file to allow Google to crawl your site and index it.
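For reference, an XML sitemap in the sitemaps.org format can be generated with nothing more than Python's `xml.etree.ElementTree`. This is a minimal sketch: it writes only the required `<loc>` element for each URL. The function name `build_sitemap` and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls: Iterable[str]) -> str:
    """Return a minimal sitemaps.org-style XML sitemap listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc in urls:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as '&' in URLs automatically.
        ET.SubElement(url, "loc").text = loc
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")

print(build_sitemap(["http://www.example.com/", "http://www.example.com/services/"]))
```

Optional elements such as `<lastmod>` can be added the same way with further `SubElement` calls.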

There is a new Webmaster tool available that acts as a generator for robots.txt files. It helps you build the file, and all you have to do is enter the areas you do not want robots to crawl. You can also make it very specific, blocking only certain types of robots from certain types of files. After you use the generator tool, you can take the file for a test drive using the analysis tool. Once you have seen that your file is ready to go, you can simply save the new file in the root directory of your website and sit back.
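The test-drive step can be approximated locally with Python's `urllib.robotparser`. This is a sketch, not the Webmaster tool itself. The rules below are hypothetical: the image crawler is kept out of `/photos/`, and every other crawler is kept out of `/private/`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for the image crawler, one catch-all group.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /photos/

User-agent: *
Disallow: /private/
"""

def is_allowed(robots_txt: str, agent: str, path: str) -> bool:
    """Report whether `agent` may fetch `path` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)

for agent, path in [("Googlebot-Image", "/photos/beach.jpg"),
                    ("Googlebot", "/photos/beach.jpg"),
                    ("Googlebot", "/private/report.html"),
                    ("Googlebot-Image", "/private/report.html")]:
    print(agent, path, "allowed" if is_allowed(ROBOTS_TXT, agent, path) else "blocked")
```

Note the last case: a crawler that matches its own `User-agent` group follows only that group. Here `Googlebot-Image` may fetch `/private/`, because the `*` group no longer applies to it. This is exactly the kind of surprise a test run is meant to catch.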

Sunday, May 16, 2010

black hat seo

Black Hat search engine optimization is customarily defined as techniques that are used to get higher search rankings in an unethical manner. These black hat SEO techniques usually include one or more of the following characteristics:

1. Breaks search engine rules and regulations

2. Creates a poor user experience directly because of the black hat SEO techniques utilized on the Web site

3. Unethically presents content in a different visual or non-visual way to search engine spiders and search engine users

A lot of what is known as black hat SEO actually used to be legit, but some folks went a bit overboard, and now these techniques are frowned upon by the SEO community at large. These black hat SEO practices will actually provide short-term gains in terms of rankings, but if you are discovered using these spammy techniques on your Web site, you run the risk of being penalized by search engines. Black hat SEO is basically a short-sighted solution to a long-term problem, which is creating a Web site that provides both a great user experience and all that goes with that.

Black Hat SEO Techniques To Avoid

• Keyword stuffing: Packing long lists of keywords and nothing else onto your site will eventually get you penalized by search engines. Learn how to find and place keywords and phrases the right way on your Web site with my article titled Learn Where & How To Put Keywords In Your Site Pages.


• Invisible text: This is putting lists of keywords in white text on a white background in hopes of attracting more search engine spiders. Again, not a good way to attract searchers or search engine crawlers.


• Doorway Pages: A doorway page is basically a “fake” page that the user will never see. It is purely for search engine spiders, and attempts to trick them into indexing the site higher. Read more about doorway pages.
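One simple way to see keyword stuffing in numbers is keyword density: the share of a page's words that are the target keyword. The sketch below is an illustration under stated assumptions. It handles single-word keywords only, uses a naive word tokenizer, and sets no "safe" threshold, because the post does not give one and search engines do not publish one. The function name `keyword_density` is hypothetical.

```python
import re
from collections import Counter

def keyword_density(text: str, keyword: str) -> float:
    """Fraction of the words in `text` that are exactly `keyword` (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return Counter(words)[keyword.lower()] / len(words)

stuffed = "cheap shoes cheap shoes cheap shoes buy cheap shoes now"
print(f"{keyword_density(stuffed, 'cheap'):.0%}")
```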

Friday, May 7, 2010

search engine optimization techniques

A basic tool that all SEO experts tend to use is the Google Keyword Tool, which is free. There are other tools to help you determine what people are looking to buy. There are keyword rank checkers that give you valuable insight into how your content is ranked by search engines. Other tools are keyword suggestion tools, although Google effectively provides this in its Google Keyword Tool for AdWords. There are also link analysis tools that determine which links are bringing you the most traffic, automating what would otherwise take hours of research.

Search engine optimization is a very important part of internet marketing, as it helps you get more visitors to your site. There are many ways to optimize your site, and link building happens to be one of them. Link building is about placing links on other sites to promote yours. If a site has lots of inbound links, search engines rank it higher. Link building can also effectively popularize your site, as a lot of people will come to know of your site when they see these links.

Link building is a very important aspect of SEO. It is performed in order to get a site a higher rank on search engines. Link building activity includes the creation of inbound and outbound links. These links help bring more visitors to your site. This way you can make more sales and also make your site more popular.

Marketing Web Design

To construct a great content-rich website takes planning. A competent designer uses online architectural design principles, carefully combining information delivery and intuitive navigation to satisfy the visitor’s needs (why they visited) while accomplishing their own business goals for fame or fortune (why the website was created). Having a proper architecture is vital to the internet marketing of a site that will attract and also satisfy visitors. Simply put, designing an award-winning website is not enough. The site needs to be placed in front of potential visitors, or they will not know you exist. It is the integration of web design, development, and internet marketing with focused traffic-generation tactics that is vital to the success of your site. Without smart marketing, any great website will fail. We focus specifically on how to succeed at internet marketing through website design, search engine placement, search engine marketing, submission, analytics, email, and branding programs. We help you achieve high traffic to your website that converts to revenue.

Saturday, May 1, 2010

Matt Cutts

Matt Cutts joined Google as a Software Engineer in January 2000. Before Google, he was working on his Ph.D. in computer graphics at the University of North Carolina at Chapel Hill. He has an M.S. from UNC-Chapel Hill, and B.S. degrees in both mathematics and computer science from the University of Kentucky.

Matt wrote SafeSearch, which is Google's family filter. In addition to his experience at Google, Matt held a top-secret clearance while working for the Department of Defense, and he's also worked at a game engine company. He claims that Google is the most fun by far.

Matt currently heads up the Webspam team for Google. Matt talks about webmaster-related issues on his blog.

Interview Transcript

Eric Enge: Let's talk about different kinds of link encoding that people do, such as links that go through JavaScript or some sort of redirect to link to someone, yet the link actually does represent an endorsement. Can you say anything about the scenarios in which the link is actually still recognized as a link?

Matt Cutts: A direct link is always the simplest, so if you can manage to do a direct link that's always very helpful. There was an interesting proposal recently by somebody who works on Firefox or for Mozilla, I think, which was the idea of a ping attribute, where the link can still be direct, but the ping could be used for tracking purposes. So, something like that could certainly be promising, because it lets you keep the direct nature of a link while still sending a signal to someone. In general, Google does a relatively good job of following the 301s, and 302s, and even Meta Refreshes and JavaScript. Typically what we don't do would be to follow a chain of redirects that goes through a URL that is itself forbidden by robots.txt.

Eric Enge: Right.

Matt Cutts: I think in many cases we can calculate the proper or appropriate amount of PageRank, or Link Juice, or whatever you want to call it, that should flow through such links.

Eric Enge: Right. So, you do try to track that and provide credit.

Matt Cutts: Yes.
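To make the redirect-chain behavior Cutts describes concrete, here is a small sketch over an in-memory redirect table. It is not Google's implementation. It handles only HTTP 301/302 hops, not Meta Refreshes or JavaScript, and it models "stop if the chain passes through a URL blocked by robots.txt" with a caller-supplied `is_allowed` check. The names `resolve_redirects` and `REDIRECT_STATUSES`, and the hop limit, are hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical redirect table: URL -> (HTTP status, Location target).
Redirects = Dict[str, Tuple[int, str]]
REDIRECT_STATUSES = {301, 302}

def resolve_redirects(url: str, redirects: Redirects,
                      is_allowed: Callable[[str], bool],
                      max_hops: int = 5) -> Optional[str]:
    """Follow 301/302 hops to the final URL, or return None if the chain
    hits a URL blocked by robots.txt, loops, or exceeds max_hops."""
    seen = set()
    for _ in range(max_hops + 1):
        if not is_allowed(url) or url in seen:
            return None
        seen.add(url)
        hop = redirects.get(url)
        if hop is None or hop[0] not in REDIRECT_STATUSES:
            return url  # not a redirect: this is where the link really lands
        url = hop[1]
    return None

redirects = {
    "/go/partner": (301, "/out/partner"),
    "/out/partner": (302, "/partner-home"),
    "/go/blocked": (301, "/cgi-bin/track"),
}
print(resolve_redirects("/go/partner", redirects, lambda u: not u.startswith("/cgi-bin/")))
```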

Eric Enge: Right. Let's talk a bit about the various uses of NoIndex, NoFollow, and Robots.txt. They all have their own little differences to them. Let's review these with respect to 3 things: (1) whether it stops the passing of link juice; (2) whether or not the page is still crawled; and (3) whether or not it keeps the affected page out of the index.

Matt Cutts: I will start with robots.txt, because that's the fundamental method of putting up an electronic no trespassing sign that people have used since 1996. Robots.txt is interesting, because you can easily tell any search engine to not crawl a particular directory, or even a page, and many search engines support variants such as wildcards, so you can say don't crawl *.gif, and we won't crawl any GIFs for our image crawl.
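In an actual robots.txt file, the "don't crawl *.gif" example is usually written as `Disallow: /*.gif$`. Here `*` matches any run of characters and a trailing `$` anchors the pattern to the end of the URL. Below is a minimal sketch of that matching in Python, assuming just those two extensions on top of ordinary prefix matching. The function name is hypothetical.

```python
import re

def robots_pattern_matches(pattern: str, path: str) -> bool:
    """Match a URL path against a robots.txt path pattern using the
    common '*' (any characters) and trailing '$' (end of URL) extensions."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters, then turn each escaped '*' back into '.*'.
    regex = re.escape(pattern).replace(r"\*", ".*")
    if anchored:
        regex += "$"
    # re.match anchors at the start only, mirroring robots.txt prefix matching.
    return re.match(regex, path) is not None

for path in ("/images/logo.gif", "/images/logo.gif?v=2", "/images/logo.png"):
    print(path, robots_pattern_matches("/*.gif$", path))
```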

We even have additional standards such as Sitemap Support, so you can say here's a link to where my Sitemap can be found. I believe the only robots.txt extension in common use that Google doesn't support is the crawl-delay. And, the reason that Google doesn't support crawl-delay is because way too many people accidentally mess it up. For example, they set crawl-delay to a hundred thousand, and that means you get to crawl one page every other day or something like that.

We have even seen people who set a crawl-delay such that we'd only be allowed to crawl one page per month. What we have done instead is provide throttling ability within Webmaster Central, but crawl-delay is the inverse; it's saying crawl me once every "n" seconds. In fact what you really want is host-load, which lets you define how many Googlebots are allowed to crawl your site at once. So, a host-load of two would mean two Googlebots are allowed to be crawling the site at once.
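The arithmetic behind the crawl-delay complaint is easy to check. A day has 86,400 seconds, so a crawl-delay of 100,000 seconds allows about 0.86 fetches per day, or roughly one page every 28 hours. One page per month corresponds to a delay of about 2,592,000 seconds (30 days). The sketch below reads both the `Crawl-delay` and `Sitemap` lines with Python's `urllib.robotparser`. `crawl_delay()` needs Python 3.6+ and `site_maps()` needs 3.8+. The robots.txt content is hypothetical.

```python
from urllib.robotparser import RobotFileParser

SECONDS_PER_DAY = 24 * 60 * 60

# Hypothetical robots.txt with the kind of oversized crawl-delay described above.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 100000
Disallow: /private/

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

delay = parser.crawl_delay("*")          # seconds between fetches, or None
pages_per_day = SECONDS_PER_DAY / delay  # fetches a polite crawler could make per day
print(f"crawl-delay {delay}s -> {pages_per_day:.2f} pages/day")
print("sitemaps:", parser.site_maps())
```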

Now, robots.txt says you are not allowed to crawl a page, and Google therefore does not crawl pages that are forbidden in robots.txt. However, they can accrue PageRank, and they can be returned in our search results.

In the early days, lots of very popular websites didn't want to be crawled at all. For example, eBay and the New York Times did not allow any search engine, or at least not Google to crawl any pages from it. The Library of Congress had various sections that said you are not allowed to crawl with a search engine. And so, when someone came to Google and they typed in eBay, and we haven't crawled eBay, and we couldn't return eBay, we looked kind of suboptimal. So, the compromise that we decided to come up with was, we wouldn't crawl you from robots.txt, but we could return that URL reference that we saw.

Eric Enge: Based on the links from other sites to those pages.

Matt Cutts: Exactly. So, we would return the un-crawled reference to eBay.

Eric Enge: The classic way that shows is you just list the URL, with no description, and that would be the entry that you see in the index, right?

Matt Cutts: Exactly. The funny thing is that we could sometimes rely on the ODP description (Editor: also known as DMOZ). And so, even without crawling, we could return a reference that looked so good that people thought we crawled it, and so that caused a little bit of earlier confusion. So, robots.txt was one of the longest-standing standards. Whereas for Google, NoIndex means we won't even show it in our search results.

So, with robots.txt for good reasons we've shown the reference even if we can't crawl it, whereas if we crawl a page and find a Meta tag that says NoIndex, we won't even return that page. For better or for worse that's the decision that we've made. I believe Yahoo and Microsoft might handle NoIndex slightly differently, which is a little unfortunate, but everybody gets to choose how they want to handle different tags.

Eric Enge: Can a NoIndex page accumulate PageRank?

Matt Cutts: A NoIndex page can accumulate PageRank, because the links are still followed outwards from a NoIndex page.

Eric Enge: So, it can accumulate and pass PageRank.

Matt Cutts: Right, and it will still accumulate PageRank, but it won't be showing in our Index. So, I wouldn't make a NoIndex page that itself is a dead end. You can make a NoIndex page that has links to lots of other pages.

For example you might want to have a master Sitemap page and for whatever reason NoIndex that, but then have links to all your sub Sitemaps.

Eric Enge: Another example is if you have pages on a site with content where, from a user point of view, you recognize that it's valuable to have the page, but you feel that it is too duplicative of content on another page on the site.

That page might still get links, but you don't want it in the Index and you want the crawler to follow the paths into the rest of the site.

Matt Cutts: That's right. Another good example is, maybe you have a login page, and everybody ends up linking to that login page. That provides very little content value, so you could NoIndex that page, but then the outgoing links would still have PageRank.

Now, if you want to you can also add a NoFollow metatag, and that will say don't show this page at all in Google's Index, and don't follow any outgoing links, and no PageRank flows from that page. We really think of these things as trying to provide as many opportunities as possible to sculpt where you want your PageRank to flow, or where you want Googlebot to spend more time and attention.
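To illustrate the "sculpting" idea, here is a toy PageRank computation in which nofollowed links pass nothing. This is a simplified classroom model following the interview's description, not Google's actual computation. In this sketch, rank held by a page with no followed outlinks is simply not passed on, whereas textbook PageRank redistributes it. A NoIndex page is just an ordinary node here, because it still passes PageRank through its links. The graph format and page names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical link graph: page -> list of (target, is_nofollow) pairs.
LinkGraph = Dict[str, List[Tuple[str, bool]]]

def pagerank(graph: LinkGraph, damping: float = 0.85, iterations: int = 50) -> Dict[str, float]:
    """Simplified PageRank in which nofollowed links pass nothing.

    Rank held by a page with no followed outlinks is not redistributed
    (it simply leaks), so the scores may sum to less than 1.
    """
    pages = set(graph) | {target for links in graph.values() for target, _ in links}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, links in graph.items():
            followed = [target for target, nofollow in links if not nofollow]
            for target in followed:
                new_rank[target] += damping * rank[page] / len(followed)
        rank = new_rank
    return rank

site = {
    "home": [("about", False), ("login", True)],  # the login link is nofollowed
    "about": [("home", False)],
}
for page, score in sorted(pagerank(site).items()):
    print(f"{page}: {score:.3f}")
```

With the login link nofollowed, the login page keeps only the baseline share. Make that link followed, and some of the home page's rank flows to it instead of to the about page.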

Eric Enge: Does the NoFollow metatag imply a NoIndex on a page?

Matt Cutts: No. The NoIndex and NoFollow metatags are independent. The NoIndex metatag, for Google at least, means don't show this page in Google's index. The NoFollow metatag means don't follow the outgoing links on this entire page.
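Because the two directives are independent, a crawler-side check has to read them separately. Here is a minimal sketch using Python's standard `html.parser` to collect the directives from a `<meta name="robots" content="...">` tag. The class and function names are hypothetical. Crawler-specific meta names (for example `googlebot`) are deliberately ignored to keep the example short.

```python
from html.parser import HTMLParser
from typing import Set

class MetaRobotsParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())

def meta_robots(html_text: str) -> Set[str]:
    parser = MetaRobotsParser()
    parser.feed(html_text)
    parser.close()
    return parser.directives

page = '<html><head><meta name="robots" content="NOINDEX, follow"></head></html>'
directives = meta_robots(page)
print("noindex" in directives, "nofollow" in directives)  # checked independently
```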

Eric Enge: How about page A links to page B, and page A has a NoFollow metatag, or the link to page B has a NoFollow on the link. Will page B still be crawled?

Matt Cutts: It won't be crawled because of the links found on page A. But if some other page on the web links to page B, then we might discover page B via those other links.

Eric Enge: Right. So there are two levels of NoFollow. There is the attribute on a link, and then there is the metatag, right.

Matt Cutts: Exactly.

Eric Enge: What we've been doing is working with clients and telling them to take pages like their about us page, and their contact us page, and link to them from the home page normally, without a NoFollow attribute, and then link to them using NoFollow from every other page. It's just a way of lowering the amount of link juice they get. These types of pages are usually the highest PageRank pages on the site, and they are not doing anything for you in terms of search traffic.

Matt Cutts: Absolutely. So, we really conceive of NoFollow as a pretty general mechanism. The name, NoFollow, is meant to mirror the fact that it's also a metatag. As a metatag NoFollow means don't crawl any links from this entire page.

NoFollow as an individual link attribute means don't follow this particular link, and so it really just extends that granularity down to the link level.

We did an interview with Rand Fishkin over at SEOmoz where we talked about the fact that NoFollow was a perfectly acceptable tool to use in addition to robots.txt. NoIndex and NoFollow as a metatag can change how Googlebot crawls your site. It's important to realize that typically these things are more of a second order effect. What matters the most is to have a great site and to make sure that people know about it, but, once you have a certain amount of PageRank, these tools let you choose how to develop PageRank amongst your pages.
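The two levels of NoFollow can be sketched as a link extractor. Links carrying `rel="nofollow"` are set aside, and a page-level NoFollow meta tag suppresses every link on the page. This is an illustrative sketch with hypothetical names, not how any particular crawler is implemented. The example page mirrors the about-us/contact-us setup discussed above.

```python
from html.parser import HTMLParser
from typing import List

class LinkCollector(HTMLParser):
    """Split <a href> links into followed and rel="nofollow" lists."""

    def __init__(self) -> None:
        super().__init__()
        self.followed: List[str] = []
        self.nofollowed: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        rel_values = (attributes.get("rel") or "").lower().split()
        (self.nofollowed if "nofollow" in rel_values else self.followed).append(href)

def followable_links(html_text: str, page_nofollow: bool = False) -> List[str]:
    """Links a crawler honoring nofollow would follow from this page.
    A page-level NoFollow meta tag suppresses every link on the page."""
    collector = LinkCollector()
    collector.feed(html_text)
    collector.close()
    return [] if page_nofollow else collector.followed

page_html = ('<a href="/">Home</a> '
             '<a href="/about-us" rel="nofollow">About us</a> '
             '<a href="/contact" rel="nofollow">Contact</a>')
print(followable_links(page_html))
```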

Eric Enge: Right. Another example scenario might be if you have a site and discover that you have a massive duplicate content problem. A lot of people discover that because something bad happened. They want to act very promptly, so they might NoIndex those pages, because that will get it out of the index removing the duplicate content. Then, after it's out of the index, you can either just leave in the NoIndex, or you can go back to robots.txt to prevent the pages from being crawled. Does that make sense in terms of thinking about it?

Matt Cutts: That's at the level where I'd encourage people to try experiments and see what works best for them, because we do provide a lot of ways to remove content. There's robots.txt.

Eric Enge: Sure. You can also use the URL removal tool too.

Matt Cutts: The URL removal tool is another way to do it. Typically, what I would probably recommend most people do, instead of going the NoIndex route, is to make sure that all their links point to the version of the page that they think is the most important. So, if they have got two copies, you can look at the back links within our Webmaster Central, or use Yahoo, or any other tools to explore it, and say what are the back links to this particular page, why would this page be showing up as a duplicate of this other page? All the back links that are on your own pages are very easy to switch over to the preferred page. So, that's a very short-term thing that you can do, and it usually only takes a few days to go into effect. Of course, if it's some really deep URL, they could certainly try the experiment with NoIndex. I would probably lean toward using optimum routing of links as the first line of defense, and then if that doesn't solve it, look at or consider using NoIndex.
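The internal half of that advice can be sketched in a few lines. First invert your site's links to see which of your own pages link to the duplicate, then rewrite those links to the preferred URL. The page-to-links map and the duplicate-to-preferred map below are hypothetical inputs, for example taken from a crawl of your own site.

```python
from collections import defaultdict
from typing import Dict, List

def internal_backlinks(site: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Invert a page -> outgoing-links map into target -> linking pages."""
    backlinks: Dict[str, List[str]] = defaultdict(list)
    for source, targets in site.items():
        for target in targets:
            backlinks[target].append(source)
    return dict(backlinks)

def point_links_at_preferred(site: Dict[str, List[str]],
                             preferred: Dict[str, str]) -> Dict[str, List[str]]:
    """Rewrite every internal link to a duplicate URL so it targets the preferred URL."""
    return {source: [preferred.get(t, t) for t in targets] for source, targets in site.items()}

site = {
    "/": ["/widgets", "/widgets?sort=price"],
    "/blog/post-1": ["/widgets?sort=price"],
}
preferred = {"/widgets?sort=price": "/widgets"}

print(internal_backlinks(site)["/widgets?sort=price"])       # pages linking to the duplicate
fixed = point_links_at_preferred(site, preferred)
print(internal_backlinks(fixed).get("/widgets?sort=price"))  # None once links are consolidated
```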

Eric Enge: Let's talk about non-link based algorithms. What are some of the things that you can use as signals that aren't links to help with relevance and search quality? Also, can you give any indication about such signals that you are already using?

Matt Cutts: I would certainly say that the links are the primary way that we look at things now in terms of reputation. The trouble with something like other ways of measuring reputation is that the data might be sparse. Imagine for example that you decided to look at all the people that are in various yellow page directories, across the web, for the list of their address, or stuff like that. The problem is, even a relatively savvy business with multiple locations might not think to list all their business addresses.

A lot of these signals that we look at to determine quality or to help to determine reputation can be noisy. I would convey Google's basic position as that we are open to any signals that could potentially improve quality. If someone walked up to me and said, the phase of the moon correlates very well with the site being high quality, I wouldn't rule it out, I wouldn't take it off the table, I would do the analysis and look at it.

Eric Enge: And, there would be SEOs out there trying to steer the course of the moon.

Matt Cutts: It's funny, because if you remember Webmaster World used to track updates on the Google Dance, and they had a chart, because it was roughly on a 30 day schedule. When a full moon came around people started to look for the Google Dance to happen.

In any event, the trouble is any potential signal could be sparse, or could be noisy, and so you have to be very careful about considering signal quality.

Eric Enge: Right. So, an example of a noisy signal might be the number of Gadgets installed from a particular site onto people's iGoogle homepage.

Matt Cutts: I could certainly imagine someone trying to spam that signal, creating a bunch of accounts, and then installing a bunch of their own Gadgets or something like that. I am sad to say you do have to step into that adversarial analysis phase where you say okay, how would someone abuse this anytime you are thinking about some new network signal.

Eric Enge: Or bounce rate is another thing that you could look at. For example, someone does a search and goes to a site, and then they are almost immediately back at the Google search results page clicking on a different link, or doing a very similar search. You could use that as a signal potentially.

Matt Cutts: In theory. We typically don't confirm or deny whether we'd use any given particular signal. It is a tough problem, because something that works really well in one language might not work as well in another language.

Eric Enge: Right. One of the problems with bounce rate is that the web is moving so much more towards "just give them the answer now." For example, if you have a Gadget, you want the answer in the Gadget. If you use subscribed links, you want the answer in the subscribed links. When you get someone to your site, there is something to be said for giving them the answer they are looking for immediately, and they might see it and immediately leave (and you get the branding / relationship benefit of that).

In this case, it's actually a positive quality signal rather than a negative quality signal.

Matt Cutts: Right. You could take it even further and help people get the answer directly from a snippet on the search engine results page, so they don't click on the link at all. There are also a lot of weird corner cases you have to consider anytime you are thinking about a new way to try to measure quality.

Eric Enge: Right, indeed. What about the toolbar data, and Google analytics data?

Matt Cutts: Well, I have made a promise that my Webspam team wouldn't go to the Google Analytics group and get their data and use it. Search quality or other parts of Google might use it, but certainly my group does not. I have talked before about how data from the Google toolbar could be pretty noisy as well. You can see an example of how noisy this is by installing Alexa. If you do, you see a definite skew towards Webmaster sites. I know that my site does not get as much traffic as many other sites, and it might register higher on Alexa because of this bias.

Eric Enge: Right. A site owner could start prompting people to install the Google toolbar whenever they come to their site.

Matt Cutts: Right. Are you sure you don't want to install a Google toolbar, Alexa, and why not throw in Compete and Quantcast? I am sure Webmasters are a little savvier about that than the vast majority of sites. So, it's interesting to see that there is usually a Webmaster bias or SEO bias with many of these usage-based tools.

Eric Enge: Let's move on to hidden text. There are a lot of legitimate ways people can use hidden text, and, there are of course ways they can illegitimately use hidden text.

It strikes me that many of these kinds of hidden text are hard to tell apart. You can have someone who is using a simple CSS display:none scenario, and perhaps they are stuffing keywords, but maybe they do this with a certain amount of intelligence, making it much harder to detect than the site you recently wrote about. So, how do you deal with these various forms of hidden text?

Matt Cutts: Sure. I don't know if you saw the blog post recently where somebody tried to lay out many different ways to do hidden text, and ended up coming up with 14 different techniques. It was kind of a fun blog post, and I forwarded it to somebody and said "hey, how many do we check for?" There were at least a couple that weren't strictly hidden text, but it was still an interesting post.

Certainly there are some cases where people do deceptive or abusive things with hidden text, and those are the things that get our users most angry. If your web counter displays a single number, that's just a number, a single number. Probably, users aren't going to complain about that to Google, but if you have 4,000 stuffed words down at the bottom of the page, that's clearly the sort of thing that, if a user realizes it's down at the bottom of the page, they get angry about.

Interestingly enough they get angry about it whether it helped or not. I saw somebody do a blog post recently that had a complaint about six words of hidden text and how they showed up for the query "access panels". In fact, the hidden text didn't even include the word access panels, just a variant of that phrase.

Eric Enge: I am familiar with the post.

Matt Cutts: I thought it was funny that this person had gotten really offended at six words of hidden text, and complained about a query which had only one word out of the two. So, you do see a wide spectrum, where people really dislike a ton of hidden keyword stuff. They might not mind a number, but even with as little as six words, we do see complaints about that. So, our philosophy has tried to be not to find any false positives, but to try to detect stuff that would qualify as keyword stuffing, or gibberish, or stitching pages, or scraping, especially put together with hidden text.

We use a combination of algorithmic and manual things to find hidden text. I think Google is alone in notifying Webmasters about relatively small incidences of hidden text, because that is something where we'll try to drop an email to the Webmaster, and alert them in Webmaster Central. Typically, you'd get a relatively short-term penalty from Google, maybe 30 days for something like that. But, that can certainly go up over time, if you continue to leave the text on your page.

Eric Enge: Right. So, a 30-day penalty in this sort of situation, is that getting removed from the index, or just de-prioritizing their rankings?

Matt Cutts: Typically with hidden text, a regular person can look at it and instantly tell that it is hidden text. There are certainly great cases you could conjure up where that is not the case, but the vast majority of the time it's relatively obvious. So, for that it would typically be a removal for 30 days.

Then, if the site removes the hidden text or does a reconsideration request directly after that it could be shorter. But, if they continue to leave up that hidden text then that penalty could get longer.

We have to balance what we think is best for our users. We don't want to remove resources from our index longer than we need to, especially if they are relatively high quality. But, at the same time we do want to have a clean index and protect the relevance of it.

Eric Enge: Right. Note that Accesspanels.net has removed the hidden text and they are still ranked no. 1 in Google for the query "access panels".

I checked this a few days ago, and the hidden text had been removed. The site has a "last updated" indicator at the bottom of the page, and it was just the day before I checked.

Matt Cutts: That's, we probably shouldn't get into too much detail about individual examples, but that one got our attention and is winding its way through the system.

Eric Enge: Right. When reporting web spam, writing a blog post in a very popular blog and getting a lot of peoples' attention to it is fairly effective. But, also Webmaster tools allows you to do submissions there, and it gets looked at pretty quickly, doesn't it?

Matt Cutts: It does. We try to be pretty careful about the submissions that we get to our spam report form. We've always been clear that the first and primary goal with those is to look at those to try to figure out how to improve our algorithmic quality. But, it is definitely the case that we look at many of those manually as well, and so you can imagine if you had a complaint about a popular site because it had hidden text, we would certainly check that out. For example, the incident we discussed just a minute ago, someone had checked it out earlier today and noticed the hidden text is already gone.

We probably won't bother to put a postmortem penalty on that one, but, it's definitely the case that we try to keep an open mind and look at spam reports, and reports across the web not just on big blogs, but also on small blogs.

We try to be pretty responsive and adapt relatively well. That particular incident was interesting, but I don't think that the text involved was actually affecting that query since it was different words.

Eric Enge: Right. Are there hidden text scenarios where it is harder for you to discern whether or not they are spam, such as showing just part of a site's terms and conditions on display, or dynamic menu structures? Are there any scenarios where it's really hard for you to tell whether it is spam or not?

Matt Cutts: I think Google handles the vast majority of idioms like dynamic menus and things like that very well. In almost all of these cases you can construct interesting examples of hidden text. Hidden text, like many techniques, is on a spectrum. The vast majority of the time, you can look and you can instantly tell that it is malicious, or it's a huge amount of text, or it's not designed for the user. Typically we focus our efforts on the most important things that we consider to be a high priority. The keyword stuffed pages with a lot of hidden text, we definitely give more attention.

Eric Enge: Right.

Matt Cutts: So, we do view a lot of different spam or quality techniques as being on a spectrum. And, the best advice that I can give to your readers is probably to ask a friend to look at their site, it's easy to do a Ctrl+A, it's easy to check things with cascading style sheets off, and stick to the more common idioms, the best practices that lots of sites do rather than trying to do an extremely weird thing that could be misinterpreted even by a regular person as being spamming.
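Part of the "check with style sheets off" advice can be automated for a site owner's own pages. The sketch below lists words inside elements whose inline style hides them (`display:none`, `visibility:hidden`) or sets the text color equal to the background color, the white-on-white trick from the black hat list. It is a rough self-audit aid under stated assumptions, not Google's detection method. It reads only inline `style` attributes, not external stylesheets. It also does not normalize colors, so `#fff` and `white` count as different. All names are hypothetical.

```python
import re
from html.parser import HTMLParser
from typing import List, Optional

# Elements that never have a closing tag, so they must not go on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}
HIDDEN_RULE = re.compile(r"display\s*:\s*none|visibility\s*:\s*hidden", re.I)

def _css_value(style: str, prop: str) -> Optional[str]:
    """Return the value of one property in an inline style string, if present."""
    match = re.search(r"(?:^|;)\s*" + re.escape(prop) + r"\s*:\s*([^;]+)", style, re.I)
    return match.group(1).strip().lower() if match else None

def looks_hidden(style: str) -> bool:
    if HIDDEN_RULE.search(style):
        return True
    color = _css_value(style, "color")
    return color is not None and color == _css_value(style, "background-color")

class HiddenTextFinder(HTMLParser):
    """Collect words inside elements hidden by their own or an ancestor's inline style."""

    def __init__(self) -> None:
        super().__init__()
        self.hidden_stack: List[bool] = []  # one entry per open element
        self.hidden_words: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        inherited = bool(self.hidden_stack) and self.hidden_stack[-1]
        style = dict(attrs).get("style") or ""
        self.hidden_stack.append(inherited or looks_hidden(style))

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.hidden_stack:
            self.hidden_stack.pop()

    def handle_data(self, data):
        if self.hidden_stack and self.hidden_stack[-1]:
            self.hidden_words.extend(data.split())

def hidden_words(html_text: str) -> List[str]:
    finder = HiddenTextFinder()
    finder.feed(html_text)
    finder.close()
    return finder.hidden_words

page = ('<p>Welcome to our store.</p>'
        '<div style="display: none">cheap widgets cheap widgets</div>'
        '<p style="color:#fff; background-color:#fff">discount widgets</p>'
        '<p>Contact us.</p>')
print(hidden_words(page))
```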

Eric Enge: Fair enough. There was a scenario that we reported a long while ago involving a site that was buying links, and none of those links were labeled.

There was a very broad pattern of it, but the one thing that we noticed and thought was a potential signal was that the links were segregated from the content: they were either in the right rail or the left rail, and the main content for the pages was in the middle. The links weren't integrated in the site, there was no labeling of them, but they were relevant. That's an example of a subtle signal, so it must be challenging to think about how much to make out of that kind of a signal.

Matt Cutts: We spend every day, all day, pretty much steeped in looking at high quality content and low quality content. I think our engineers and different people who are interested in web spam are relatively attuned to the things that are pretty natural and pretty organic. I think it's funny how you'll see a few people talking about how to fake being natural or fake being organic.

It's really not that hard to really be natural, and to really be organic, and sometimes the amount of creativity you put into trying to look natural could be much better used just by developing a great resource, or a great guide, or a great hook that gets people interested. That will attract completely natural links by itself, and those are always going to be much higher quality links, because they are really editorially chosen. Someone is really linking to you, because they think you've got a great site or really good content.

Eric Enge: I think you have a little bit of the Las Vegas gambling syndrome too. When someone discovers that they have something that appears to have worked, they want to do more, and then they want to do more, and then they want to do more, and it's kind of hard to stop. Certainly you don't know where the line is, and there is only one way to find the line, which is to go over it.

Matt Cutts: Hopefully the guidelines that we give in the Webmaster guidelines are relatively common sense. I thought it was kind of funny that we responded to community feedback and recommended that people avoid excessive reciprocal links (of course, some of these do happen naturally), but people started to worry and wonder about what the definition of excessive was. I thought it was kind of funny, because within one response or two responses people were saying, "If you are using a ton of automated scripts to send out spam emails, that strikes me as excessive."

People pretty reasonably and pretty quickly came to a fairly good definition of what is excessive, and that's the sort of thing where we try to give general guidance so that people can use their own common sense. Sometimes people help other people know roughly where those lines are, so they don't have to worry about getting too close to them.

Eric Enge: The last question is a link-related question. You can get a thunderstorm of links in different ways. You get on the front page of Digg, or you get written up in the New York Times, and suddenly a whole bunch of links pour into your site. There are patents held by Google that talk about temporal analysis, for example, if you are acquiring links at a certain rate and suddenly it changes to a very high rate.

That could be a spam signal, right? Correspondingly, if you are growing at a high rate and then that rate drops off significantly, that could be a poor quality signal. So, if you are a site owner and one of these things happens to you, do you need to be concerned about how that will be interpreted?

Matt Cutts: I would tell the average site owner not to worry, because in the same way that you spend all day thinking about links, and pages, and what's natural and what's not, it's very common for a few things to get on the front page of Digg. It happens dozens of times a day; so getting there might be a really unique thing for your website, but it happens around the web all the time. And so a good search engine needs to be able to distinguish the different types of linking patterns, not just for regular content, but for breaking news and things like that.

I think we do a pretty good job of distinguishing between real links and links that are maybe a little more artificial, and we are going to continue to work on improving that. We'll just keep working to get even smarter about how we process links we see going forward in time.
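To make the temporal-analysis idea Eric Enge raises a little more concrete, here is a minimal Python sketch that flags a sudden jump or drop in the weekly count of new inbound links. It is a hypothetical illustration only: the function name `rate_changes`, the four-week trailing window, and the 3x threshold are assumptions, not anything Google has described. As Matt Cutts says above, a spike on its own is normal and not something the average site owner needs to worry about.

```python
from statistics import mean


def rate_changes(weekly_links, window=4, factor=3.0):
    """Flag weeks where the new-link count jumps or falls sharply versus the trailing average.

    Hypothetical heuristic: a week is a "spike" if it has at least `factor` times the
    average of the previous `window` weeks, and a "drop" if it has at most 1/`factor`
    of that average.
    """
    flags = []
    for i in range(window, len(weekly_links)):
        baseline = mean(weekly_links[i - window:i])
        current = weekly_links[i]
        if baseline == 0:
            continue  # no history to compare against
        if current >= factor * baseline:
            flags.append((i, "spike"))
        elif current <= baseline / factor:
            flags.append((i, "drop"))
    return flags


# Example: steady growth, a front-page burst in week 5, then a sharp fall-off.
history = [10, 12, 11, 9, 10, 60, 55, 12, 2]
print(rate_changes(history))  # → [(5, 'spike'), (8, 'drop')]
```

Comparing each week against a trailing average, rather than a fixed number, keeps the check relative to the site's own history, so a small site and a large one are judged on the same terms.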

Eric Enge: You might have a very aggressive guy who is going out there, knows how to work the Digg system, and gets on the front page of Digg every week or so. He would end up with a very large link growth over a short period of time, and that's what some of the pundits would advise you to do.

Matt Cutts: That's an interesting case. I think at least with that situation you've still got to have something that's compelling enough that it gets people interested in it somehow.

Eric Enge: You have appealed to some audience.

Matt Cutts: Yeah. Whether it's a Digg technology crowd or a Techmeme crowd, or Reddit crowd, I think different things appeal to different demographics. It was interesting that at Search Engine Strategies in San Jose you saw Greg Boser pick on the viral link builder approach. But, I think one distinguishing factor is that with a viral link campaign, you still have to go viral. You can't guarantee that something will go viral, so the links you get with these campaigns have some editorial component to them, which is what we are looking for.

Eric Enge: People have to respond at some level or you are not going to get anywhere.

Matt Cutts: Right. I think it's interesting that it's relatively rare these days for people to do a query and find completely off topic spam. It absolutely can still happen, and if you go looking for it you can find off topic spam, but it's not a problem that most people have on a day-to-day basis these days. Over time, in Web spam, we start to think more about bias and skew and how to rank things that are on topic appropriately. Just like we used to think about how we return the most relevant pages instead of off topic stuff.

The fun thing about working at Google is that the challenges are always changing, and you come in to work and there are always new and interesting situations to play with. So, I think we will keep working on trying to improve search quality and improve how we handle links and reputation, and in turn we are trying to work with Webmasters who want to return the best content and try to make great sites so they can be successful.

SEO SUBMISSION

SEO, or search engine optimization, is made up of a variety of tasks, including article marketing, keyword placement, and link building. As an internet business owner, you are no doubt already aware of the importance of SEO to maintaining and increasing your business. However (and yes, there is always more), there may be another option that you have yet to try that could be of great use to you, both for increasing business growth and for freeing up some of your valuable time. That option is Directory Submission, or Directory Submission Services.

SEO submissions are part of the optimization activities you need to do regularly to make sure you stay on top of the affiliate marketing game. The web is updated continuously, and new content changes lives daily. Okay, I exaggerate. What it changes are page ranks and search results, but to an affiliate marketer, that's as good as life itself. How do you keep up with the rest of the pack, who are looking for more innovative ways to get ahead in the race to the first page of Google searches? Think SEO submissions.

These SEO submissions include many things, like Directory Submission Service, Social Bookmarking Service, Squidoo lens and HubPages hub creation, Article Marketing Service, Content Writing Service, Search Engine Indexing Service, and Blog Carnival Service. In addition to Google, MSN, Yahoo, and the other major search engines, there are many other directories that will accept URL submissions for free listings. You also need to put your website in those directories to expand your market base. All 50 online directories list URLs in broad categories, and their indexes are linked with other websites as well. That means listing with them increases your site's online visibility via associated traffic.

Usually, submitting all your subpages (one page each day) is the fastest way of getting your whole site indexed. However, some search engines might rank your web pages higher if they are not directly submitted but found by the search engine spider. Try linking to all your subpages from the main page, submitting only the main page, and waiting 2 to 3 months. Then check which pages are listed, and submit only the pages not yet found by the spider.
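The check-then-submit routine above can be sketched in a few lines of Python, assuming you already have a list of your site's pages and a list of the pages you have confirmed are indexed (how you gather those lists is up to you). The function name `submission_schedule` and the example page paths are hypothetical; the sketch simply drops the indexed pages and spaces the rest out at one submission per day.

```python
from datetime import date, timedelta


def submission_schedule(site_pages, indexed_pages, start):
    """Pair each page the spider has not found yet with a submission date, one page per day."""
    indexed = set(indexed_pages)
    pending = [page for page in site_pages if page not in indexed]
    return [(start + timedelta(days=i), page) for i, page in enumerate(pending)]


# Hypothetical example: the spider found the home page and the about page on its own.
pages = ["/", "/services.html", "/about.html", "/contact.html"]
found_by_spider = ["/", "/about.html"]
for day, page in submission_schedule(pages, found_by_spider, date(2010, 5, 1)):
    print(day.isoformat(), page)
```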

Monday, April 26, 2010

seo ranking – Page Rank

Internal linking is one of the most important on-site SEO elements. Proper internal linking ensures that web pages are accessible to search engines and that they are indexed properly. Google allocates a value to every page of your site; this is PageRank. Your homepage is seen as the most important page of your site, and therefore the homepage usually has the highest PageRank value. This PageRank is then distributed among the lower pages of your site. The links that point from your homepage to internal pages are usually found in the main navigation of the site, which allows your homepage PageRank to flow naturally to the internal pages being linked to. PR gets distributed evenly across the pages that are being linked to. A good internal linking structure should be intuitive as well as functionality driven; this will boost the ratings of those internal pages as well.

Because PageRank is assigned based on which pages link to you, it is possible that an internal page of your website with more and better backlinks might get a higher PR than the homepage. In fact, you could use this to your advantage: you could link your best articles or best-optimized pages from the internal page with more PR and share its PR value with them. If you think a particular page is odd enough, you may want to avoid making that page your home page.

It is best to link all pages of a website back to its homepage; this will save you from any PageRank loss. It is always advised to use the rel="nofollow" attribute on links when you want to block a particular page linked from the homepage from receiving PageRank. This will avoid a PageRank loss.
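To show how homepage PageRank flows to internal pages, here is a minimal power-iteration PageRank sketch in Python for a small, made-up site. It follows the textbook formulation (each page splits its rank evenly among the pages it links to, with a damping factor of 0.85 as in the original PageRank paper); it is not Google's production algorithm, and the page names are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook power-iteration PageRank over {page: [pages it links to]}."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # PR is split evenly across the pages being linked to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over every page.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical site: main navigation on the homepage, every page links back home.
site = {
    "home": ["about", "products", "blog"],
    "about": ["home"],
    "products": ["home", "widget"],
    "blog": ["home", "widget"],
    "widget": ["home"],
}
for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:10s} {score:.3f}")
```

In this toy site every page links back to "home", so the homepage ends up with the highest score, while "widget", which is linked from two internal pages, ends up slightly ahead of "about", which is linked only from the homepage.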

SEO Technologies is an expert in Search Engine Optimization. We improve your websites to achieve the highest PageRank through Directory Submission, Article Submission, Blog Posting, etc., at an affordable price. We help increase traffic to your websites through Social Bookmarking and Social Networking on sites like Twitter and Facebook. For more information, contact us.

Tuesday, April 20, 2010

SEO META TAGS


The keyword META tag is still not used by search engines, but when you submit to directories (a valuable use of time, by the way), META keywords are in many cases used to automatically fill in the keywords for your site in that directory. The META description tag has soared in importance. How so, you ask? Google has started showing the META description in its search results when certain criteria are met. The criteria being used appear to be very simple, unlike many of the other things Google does: when the keyword or search terms appear in the META description, the META description is used instead of a snippet of text containing the keyword from your page.
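As a rough illustration of the selection rule just described, here is a hypothetical Python sketch. It assumes that every query term must appear in the META description (as a whole word) for it to be chosen, and that otherwise the snippet is a 160-character window of page text starting a little before the earliest matching term. Google's actual criteria are not public, so treat the function name `choose_snippet` and both rules as assumptions.

```python
import re


def choose_snippet(query, meta_description, page_text, width=160):
    """Hypothetical snippet rule: use the META description when every query term
    appears in it as a whole word; otherwise fall back to a window of page text."""
    terms = [t.lower() for t in query.split()]
    desc = meta_description.lower()
    if meta_description and all(re.search(r"\b" + re.escape(t) + r"\b", desc) for t in terms):
        return meta_description
    # Fallback: start a little before the earliest query term found in the page text.
    lowered = page_text.lower()
    hits = [lowered.find(t) for t in terms if t in lowered]
    start = max(0, min(hits) - width // 4) if hits else 0
    return page_text[start:start + width]


print(choose_snippet("silver dog collars",
                     "Handmade silver dog collars, shipped worldwide.",
                     "Welcome to our shop. We sell leashes and collars."))
```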

At one point, Meta tags were considered a relatively important factor by search engines. AltaVista and other older search engines used them to help determine a site's theme as well as its relevance to a given term. This is no longer the case; search engines now rely on much more advanced techniques to do this. That said, Meta tags still have some life left in them: they are sometimes used as the snippet of a site's content in search engine result pages by Google, and possibly by other search engines. It's also possible they still have a little influence with some search engines in determining a site's content.

SEO Meta tags can also be used to make your website suitable for all user age groups, particularly children. By adding a PICS (Platform for Internet Content Selection) label to your website, you can get your website rated. Tags can also control the browser, robots, and the internal categorization of your website. Internal categories are needed where you have multiple authors and contributors to your site's content; the tags useful here relate to the author's name and the last update of the content. With the robots tag you can permit indexing by robots. Lastly, you can use a tag analyzer to assess the tags you have used.

SEO TECHNIQUES

SEO is the art of getting traffic to your site by optimizing on-page content and off-page links to your site. There are 3 rules that you must follow to make your site a success:

* Only target keywords that are receiving traffic.

* Only target keywords that have a commercial value.

* Target keywords with low enough competition.

There are many ways of implementing good SEO techniques, but be aware that there are also bad techniques that should be avoided at all costs.

Search Engine Optimization must be focused on human visitors in order to achieve good quality traffic and conversion rates. Page content should be specific, informative, and relevant to a search query. Writing relevant, quality content is one of the most important SEO techniques, and it will unlock the doors of your website to real visitors. Another technique to follow when making use of enhanced SEO services is to install a sitemap. A well-crafted sitemap helps users navigate easily within your website, and search engines can easily track the content of the site through the links in a sitemap.

A site map is an absolute must for those wishing to maximize their website's SEO potential. Why is this? Because a site map allows robots to index deep into the subpages of a website, which means the totality of the site will count towards its SEO impact. Content is king when it comes to search engine optimization. The days of sparse text and tons of pictures are long gone when it comes to boosting SEO potential. Websites need solid text content loaded with the proper keyword selections in order to succeed. Never lose sight of this when developing SEO plans.
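For the site map itself, search engines accept an XML file in the sitemaps.org format. Below is a minimal Python sketch that builds one with the standard library; the function name `build_sitemap` and the example URLs are hypothetical, and the optional `<lastmod>`, `<changefreq>`, and `<priority>` elements are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemaps.org XML document listing each URL in its own <url><loc> entry."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & and < in the URL for us.
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap(["http://www.example.com/", "http://www.example.com/about.html"]))
```

You could save the returned text as sitemap.xml in the root of your site and then submit it through Webmaster Tools.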

We at SEO Technologies can also manage all of your content: writing articles and blogs, preparing site content, promoting your website, and using Twitter and Facebook to keep building your reputation and customer base. Twitter is only going to grow and grow, and you would be mad not to start using it properly right now. This can be automated to an extent. Contact us for fabulous service.

Saturday, April 10, 2010

Link Building

A Few Standard Offers:

o Contextual / In-Content Link Building: What works better than One Way Links? Probably your answer will be: Nothing. And what works better than In-Content One Way Links? Absolutely nothing. You are very much right. We have been providing a quality One Way Link Building Service for the last 6 months and doing great. We offer both Contextual / In-Content Link Building and Bottom Link Building. Just have a look.

Contextual Link Building Service in Detail.

o One Way Link Building: As I said, one way links work best, so we also offer another kind of one way link building where your investment is low. If your budget doesn't allow for our Contextual Link Building Service, then this is the best offer for you. Have a look in detail.

Reciprocal Link Building Service in Detail.

o Regional Directory Submissions: We have said that we are experienced in directory submissions, as directory submission is the oldest service we offer. Now we are going a step further and offering a Non-English Directory Submission Service. We have already done submissions for at least 126 websites and have a long list of happy customers. Currently we are offering French, Spanish, German, Italian, Dutch, and UK directory submissions, and in future we plan to include more languages. Worth having a look.

Regional Directory Submissions Service in Detail.

o Social Bookmark Submissions: The latest trend in the industry. We are proud to say that we were the VERY FIRST to offer Social Bookmark Submissions as a service over the Internet. We started this service on 23rd Dec, 2006. This offer will help you get over 95 backlinks if you order 100 submissions. Sounds great? YES, this is a great offer. Have a close look.

Social Bookmark Submission Service in Detail.

o Directory Submissions: One of the oldest and best link building methods. We would say directory submission is a MUST for website promotion: when your site is ready, directory submission is the very first thing we recommend. Directory Submission is our oldest service on the Internet, and we are very professional and experienced here.

Directory Submission Service in Detail.

o Article Submissions: Like directory submissions, article submissions are also a great and long-established method of getting backlinks. Article submissions help you get backlinks from within content; YES, that simply means quality backlinks. We have been doing article writing and submissions for our clients for more than 3 years. Please have a close look; you'll find it a great offer for sure.

Article Submission Service in Detail.

o Press Release & RSS Submissions: If your company's or business's news were published in Google News or Yahoo News, wouldn't that be great? It would, because it can bring a lot of popularity and traffic to your site; this alone can make your site famous in one day. All this is the wonder of Press Release Submissions. RSS submissions get your topics published in RSS directories with links back to your site. Wow, what a great thing. Have a look at our offer.

Press Release & RSS Submissions Service in Detail.

o Blogs / Forums Comment Posting: Blogs and forums are known as places to get not only links but also targeted traffic. We are experts at blog commenting and forum posting that not only brings some traffic but also builds links with your keyword as the anchor text.

Blogs / Forums Comment Posting Service in Detail.

o Content / Copywriting: If your site doesn't have unique, quality content, we are here to help you. We not only write quality content for websites but also write ebooks, tutorials, articles, press releases, and landing page content. We have a separate team for this job.

Content / Copywriting Service in Detail.

o Guaranteed Links Offers: There's no need to explain what we mean here. We are able to give you guaranteed, permanent, quality, one-way backlinks from hundreds of directories and blogs. It is the cheapest and most effective method to increase backlinks for your website. You can't miss this opportunity.

Guaranteed Links Service in Detail.

o Custom Internet Marketing: If your need does not fit any of the above options and you require a bigger or different Internet Marketing Service, then please Contact Us or Ask for a FREE Quote. We'll get back to you within 24 hours.

Forum signature linking

Forum signature linking is a technique used to build backlinks to a website. It is the process of using forum communities that allow outbound hyperlinks in their members' signatures. This can be a fast method to build up inbound links to a website, and it can also produce some targeted traffic if the website is relevant to the forum topic. It should be stated that links from forums using the nofollow attribute will have no actual Search Engine Optimization value.

Building Links Through DoFollow Forum Signatures

I haven’t written a link building post in a while because, for the most part, I was off investigating other things and getting way too complex. Then I realized it’s the things I think are simple and run-of-the-mill that people really are looking to learn about. So as people ask me questions, I note them and try to write a post. I used to hate questions. Taking someone from “What’s a signature?” to viewing the browser source in one sitting may be hard, but hopefully it’s not impossible. Now I realize these questions are the master key to the type of traffic I want at this blog. So off we go.

Forums are basically where “social networks” started.

I talk to a lot of people who want to get traffic to their site, and they usually think I can tell them how in 10-15 minutes. So I try. Rarely do people listen, and of those that listen, very few actually use what I tell them. I am not sure why this happens. Maybe they are lazy. Maybe they think, “How can something like that help me?” But there was one guy I told to use forum signatures to build links. That was about all; I thought it was simple enough to get someone started. I told him to sign up for forums, add a signature file, forget about it, and just converse. Put in your two cents wherever you can add something to the conversation. I did not list specific forums.

I told him that, and I was thinking: yet another person not taking my advice. But I was wrong. A few weeks later, he came back to me and we checked his site. He has a micro business and is outranking local corporations here in Kansas City. He is doing this with a site built from templates, and he doesn't even know HTML. And what did he use to do it? Local business directories and forums. It blew my mind: one, for getting through to someone in 10 minutes, and two, for the results.

And since most people understand forums, I think they may be the best place for the average non-tech person to build links to his site, especially if you have the gift of gab. They have been around since they were called bulletin boards, and some still are. You can find multiple forums on just about any topic you want.

Link bait

Link bait is any content or feature within a website that somehow baits viewers to place links to it from other websites. Matt Cutts defines link bait as anything "interesting enough to catch people's attention." Link bait can be an extremely powerful form of marketing, as it is viral in nature.

Link bait in search engine optimization

The quantity and quality of inbound links are two of the many metrics used by a search engine ranking algorithm to rank a website. Link bait creation falls under the task of link building and aims to increase the quantity of high-quality, relevant links to a website. Part of successful linkbaiting is devising a mini-PR campaign around the release of a link bait article so that bloggers and social media users are made aware of it and can help promote the piece in tandem. Social media traffic can generate a substantial number of links to a single web page. Sustainable link bait is rooted in quality content.

Many types of link bait

Although there are no clear-cut subdivisions within link bait, many attempt to divide it into types of hooks. This is a short list of some of the most common approaches, with brief descriptions:

* Informational hooks - Provide information that a reader may find very useful, such as rare tips and tricks or a personal experience readers can benefit from.

* News hooks - Provide fresh information and obtain citations and links as the news spreads.

* Humor hooks - Tell a funny story or a joke. A bizarre picture of your subject or mocking cartoons can also prove to be link bait.

* Evil hooks - Saying something unpopular or mean may also yield a lot of attention, for example writing about something that is unappealing about a product or a popular blogger. Provide strong reasons for it.

* Tool hooks - Create some sort of tool that is useful enough that people link to it.

* Widgets hooks - A badge or tool that can be placed or embedded on other websites, with a link included.

* Unique content hooks - This hook is intended for people who are in need of unique content or articles for traffic or AdSense revenue. It became popular after Google implemented a Duplicate Content Filter and sites with duplicate content saw a fall in traffic. To use this hook, you create unique content and give it out to bloggers and webmasters on the condition that they put a link back to your site.

Blog comments


Leaving a comment on a blog can result in a relevant dofollow link to the individual's website. Most of the time, however, leaving a comment on a blog results in a nofollow link, which is almost useless in the eyes of search engines such as Google and Yahoo. On the other hand, blog comments do get clicked on by the blog's readers if the comment is well thought out and pertains to the discussion of the other commenters and the post.

Spam in blogs (also called simply blog spam or comment spam; note that blogspam has another, more common meaning, namely the posts of a blogger who creates no-value-added posts to submit them to other sites) is a form of spamdexing. It is done by automatically posting random comments or promoting commercial services to blogs, wikis, guestbooks, or other publicly accessible online discussion boards. Any web application that accepts and displays hyperlinks submitted by visitors may be a target.

Adding links that point to the spammer's website artificially increases the site's search engine ranking. An increased ranking often results in the spammer's commercial site being listed ahead of other sites for certain searches, increasing the number of potential visitors and paying customers.

Friday, April 2, 2010

Dofollow Backlinks Are The Key to Optimizing Your Search Engine Rank

The First Tip for Increasing Your Page Rank

To find good keywords, use Google's "Get keyword ideas" tool in the AdWords section of its site. To find a good keyword with this tool, first look at the number of times each word is searched in a month. If it is higher than 100, take that word and Google it with "quotation" marks around it. If you get back fewer than 100,000 results, Google it again without quotes. Take each site from the first page and check its PageRank; I use a Firefox add-on to check PageRank. Add up each page's rank and divide by the number of pages to find the average. Don't count article sites, social sites, or Web 2.0 sites, as they carry their own weight and you can use them too.

What is the Difference Between Nofollow and Dofollow Backlinks
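The keyword test above boils down to a little arithmetic. The Python sketch below applies the two cut-offs from this tip (more than 100 searches a month, fewer than 100,000 results for the quoted phrase) and then averages the PageRank of the first-page results you looked up by hand, skipping article and social sites. The function name `average_competitor_pr`, the `EXCLUDED` domain list, and the example results are hypothetical; you would fill in your own.

```python
from statistics import mean

# Hypothetical examples of "article / social / web 2.0" domains left out of the average.
EXCLUDED = {"ezinearticles.com", "squidoo.com", "hubpages.com", "digg.com"}


def average_competitor_pr(monthly_searches, quoted_results, first_page):
    """Return the average PageRank of first-page competitors, or None if the keyword
    fails either cut-off. `first_page` is a list of (domain, pagerank) pairs."""
    if monthly_searches <= 100:      # the keyword needs more than 100 searches a month
        return None
    if quoted_results >= 100_000:    # the quoted search must return fewer than 100,000 results
        return None
    ranks = [pr for domain, pr in first_page if domain not in EXCLUDED]
    return mean(ranks) if ranks else None


results = [("site-a.com", 3), ("site-b.com", 1), ("squidoo.com", 6), ("site-c.com", 2)]
print(average_competitor_pr(500, 40_000, results))
```

A low average suggests first-page competitors you have a realistic chance of outranking; the sketch leaves the "how low is low enough" judgment to you.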

First, I'll give a brief explanation of how and why backlinks help you get traffic. Search engines use the number of links to a website to determine its rank and popularity. Google and most other major engines recognize nofollow links. Dofollow means that Google's bots will follow the link and it will add juice to your site's rank. There are two different types of nofollow links: one tells bots not to follow the link at all, and the other tells bots to follow it but not to add any weight to your rank. This doesn't mean you should shy away from nofollow links completely, as you can still get traffic from them. If that traffic stays on your page long enough to read it and/or click a link, that can help your rank. I have compiled a list of sites I use to add backlinks to my sites; these are mostly social bookmarking sites, and some will add backlinks automatically for you.

Start Blogs and Write Articles

After you have 10 to 20 keywords, you have to figure out how you are going to use them. First, start a few blogs using a keyword in the title. For each post you make, use a different keyword in the title, and add a link to your site in each post. The words you hyperlink are called the anchor text, and they should be a keyword phrase such as "dofollow backlinks add link juice". A phrase like this is called a long-tail keyword because it strings several words together, and it is a good way to focus in on a micro niche. Don't just hyperlink "click here"; search engines use the hyperlinked words as a tag. Don't make it too commercial or self-promoting, as this will sound like spam. Make it interesting, and people will read more and be more likely to click your link. Search engines monitor the time people spend on your blog and will rank it higher if a good percentage of visitors stay on it for a few minutes, which means it must be a quality site. The higher your blog ranks, the more weight its link to your site will have. You'll want to add backlinks to your blog as well; this will increase your blog's rank.

Next, write a few ezine articles. Write one of these a week with a link to your site. Again, make it high quality and not too spammy. What you are trying to do is create a body of original content by you that all links back to your site. Eventually, people interested in your topic will recognize that you have a lot of knowledge on your subject and think of you as an expert. Another key component is making all of these blogs and articles unique. Search engines can tell if the same article or blog post is duplicated over and over again, and will bury the posts and the backlinks from them.

This Is How I Get Serious Link Juice

This site uses all of the techniques I discuss in this lens to increase your page rank. It takes a few weeks to notice a big change, so they give you a one-month trial membership. Until three weeks after I started using Link Juicer, I was only getting a few visitors a week to this lens. Now I'm getting a few a day, and my rank keeps going up. I hope to soon be getting some major traffic.

More Tips to Increase Your Page Rank

Posting comments sounds easy, but it is time consuming, and again, you don't want to spam your comments. You want to search for blogs, forums, and Squidoo lenses related to your topic. When you post a comment, make sure what you say is relevant and adds to the discussion. Don't just say a few words and add your link; that is considered spam. It is best to try to be the first to reply, since this will ensure more people see your link and click on it. If the comments are dofollow, it doesn't matter as much whether you are first to post. A lot of sites have their comments set to nofollow, but you can still get traffic from posting to these. Make a few posts a day to each of the blogs and forums you can find. If you are posting to a blog, some allow the use of BBCode. Put this in your signature to automatically add your link each time you post. It looks like this: [b][url=http://www.yoursite.com/]Your Signature Text[/url][/b]. The [b] tag makes the text bold.

Here Is This Lens's Rank and Traffic

As you can see, I didn't get much traffic in the beginning. The first spike, at A, was when I sent my first e-mail through Money List Mania. The next 5 spikes came after each subsequent mailing, and the little spikes that followed were from List Machine. Spike B is where I made 3 posts to a forum. The trick there is to find a relevant topic where somebody asks a question you can answer. Find one where there are only a couple of replies, answer the question, and leave a link for more information. This way you won't be spamming.

As for rank, each time I updated the lens, it went up. Point C is after I started sending e-mails and getting traffic. Your rank will increase if people stay on your lens long enough to read it and if they click on any of your links. Point D is where I started using Link Juicer. As you can see, it helped my rank start increasing steadily.

Saturday, March 27, 2010

DoFollow

DoFollow is simply an internet slang term given to web pages or sites that are not utilizing NoFollow. NoFollow is a hyperlink attribute that tells search engines not to pass on any credibility or influence to an outbound link. Originally created to help the blogging community reduce the number of links inserted into the "comment" area of a blog page, the attribute is typically standard in blog comments. It helps overwhelmed webmasters keep spammers from gaining any kind of advantage by inserting an unwanted link on a popular page.

As a result of the implementation of NoFollow, link building has taken a massive hit, as many sites, including wikis, social bookmarking sites, corporate and private blogs, commenting plugins, and many other venues and applets across the Internet, began implementing NoFollow. This made effective link building difficult for honest people and spammers alike.

By the way, there's nothing wrong with getting NoFollow links. In fact, you'll want to get an equal amount of them as well. While they don't pass on link juice, they do help associate your site with anchor text (the keyword phrase that makes up the link pointing to your site).

How Search Engines Use NoFollow

Search engines each interpret NoFollow in their own way. Here's a list of the major search engines and their actions when a NoFollow link is seen:

* Google follows NoFollow links but simply does not pass on credit to an outbound link that is tagged with the attribute.

* Yahoo follows NoFollow links and excludes the link from all ranking calculations.

* MSN may or may not follow a NoFollow link, but it does exclude it from ranking calculations.

* Ask.com does not adhere to NoFollow.

Finding DoFollow Sites

With all of the above being said, it is rather difficult to find sites that are "DoFollow"; however, they are out there! Listed below for your convenience are running lists of multiple categories of sites that are DoFollow and are great candidates for your next backlink!

Don't Be A Spammer - Be A Value Provider!

Spamming DoFollow resources will not give you results. Not only that, it hurts the entire community, and it persuades DoFollow providers to convert their sites to NoFollow. Be sure that all of your link-building efforts are done tastefully and honestly. If you don't cram keywords and you give an honest account of what your site is about, search engines will reward you for it!

In getting links, whether they're DoFollow or not, please note that Google and other search engines are rather smart these days. If you have multiple links pointing to a page from one DoFollow source, or if that source also links to other places that give you backlinks (such as your Blogspot or Wordpress account), search engines will notice and may devalue your links to the point where they become worthless. Build links naturally and over time. Provide value and they'll be quality links. Otherwise, you'll hurt your website's link building efforts in the long run.

By the way: when it comes to link building, only the first link pointing to a site counts. Posting more than one hyperlink on the same page to the same destination won't help in any way.

How to Spot DoFollow Links

...and NoFollow ones, too

If you use Firefox, a quick way to find DoFollow links is to spot NoFollow ones! A free plugin called SearchStatus does just that: it will highlight all "NoFollow" links on a page.

Everything not highlighted is DoFollow. It's a quick and easy way to do a background check on your backlinking resources to see if they've retained DoFollow status!
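The same check SearchStatus performs can be sketched in Python with the standard library's `html.parser`: collect every `<a href>` on a page and sort it by whether its `rel` attribute contains `nofollow`. This is a minimal sketch, assuming you already have the page's HTML as a string; the names `LinkClassifier` and `classify_links` are hypothetical, and the sketch ignores a page-wide robots META tag, which can also mark every link on a page as nofollow.

```python
from html.parser import HTMLParser


class LinkClassifier(HTMLParser):
    """Sort every <a href="..."> on a page into nofollow and dofollow lists."""

    def __init__(self):
        super().__init__()
        self.nofollow = []
        self.dofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return  # anchors without a link target are skipped
        # rel can hold several space-separated values, e.g. rel="external nofollow"
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow.append(href)
        else:
            self.dofollow.append(href)


def classify_links(html):
    parser = LinkClassifier()
    parser.feed(html)
    parser.close()
    return parser.nofollow, parser.dofollow


page = '<p><a href="http://a.example/" rel="nofollow">A</a> <a href="http://b.example/">B</a></p>'
nofollow, dofollow = classify_links(page)
print("NoFollow:", nofollow)
print("DoFollow:", dofollow)
```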

Table of Contents

The lists below cover the following categories of DoFollow sites:

1) List of DoFollow Blog Services

2) List of DoFollow Social Networking Sites

3) List of DoFollow Community-Ranked Article Submission Sites

4) List of DoFollow Article Submission Sites

5) List of DoFollow Social Portal Sites

6) List of DoFollow Social Bookmarking Sites

7) List of DoFollow Wikis

List of DoFollow Blog Services

What to do with them?: Create a free blog. Write a keyword-rich (but not spammy) article promoting your site, with strategic backlinks. Name the blog after an important keyword, like silver-dog-collars.blogger.com. Create new posts and promote your site's individual categories & pages, using strategic keywords tailored for each individual post and the page it's linking to.

1 Wordpress.com

2 Blogspot

3 Tumblr

4 LiveJournal

5 Blogetery

6 Blogsome

7 ClearBlogs

8 Easyjournal

9 Blogster

10 BlogoWogo

11 Aeonity

12 Thoughts.com

List of DoFollow Social Networking Sites

What to do with them?: Add your sites and promote them socially by complimenting other members and asking them to take a look at your site. Politely leave your link in their comment area (use your own discretion, as this is typically seen as spam). These sites take up the most time in your daily schedule.

Below are the following DoFollow social networking sites:

1 RateItAll.com

2 Tweako

3 CREAMaid

4 Yedda

5 Xanga

6 BUMPzee! (requires RSS feed)

List of DoFollow Community-Ranked Article Submission Sites

Thursday, March 18, 2010

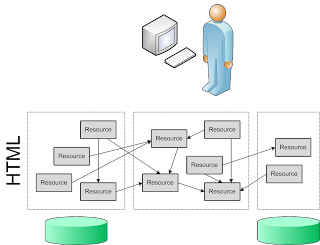

Resource Linking

In recent years, resource links have grown in importance because most major search engines have made it plain that, in Google's words, "quantity, quality, and relevance of links count towards your rating."

The engines' insistence on resource links being relevant and beneficial developed because free-for-all linking, link doping, incestuous linking, overlinking, multi-way linking, and similar schemes were employed solely to "spam" search engines, i.e. to "fool" the engines' algorithms into awarding sites employing these unethical devices undeservedly high page ranks and/or return positions.

Besides cautioning site developers (again quoting from Google) to avoid "'free-for-all' links, link popularity schemes, or submitting your site to thousands of search engines (because) these are typically useless exercises that don't affect your ranking in the results of the major search engines -- at least, not in a way you would likely consider to be positive," most major engines have deployed technology designed to "red flag" and potentially penalize sites employing such practices.

Reciprocal link

Relevant linking

Relevant linking is a derivative of reciprocal linking, or link exchanging, in which a site linked to another site contains only content compatible with and relevant to the linked site.

Three-way linking

Three-way linking (siteA ⇒ siteB ⇒ siteC ⇒ siteA) is a special type of reciprocal linking. The aim of this link-building method is to create links that look more "natural" in the eyes of search engines. The value of links from three-way linking can then be better than that of normal reciprocal links, which are usually done between two domains.

Two-Way Linking (Link Exchange)

Alternatives to the questionable automated linking practices referenced above are free, community-based link exchange forums such as LinkPartners.com, in which members list their sites according to the sites' business category and invite webmasters of other compatible sites to request a link exchange. Such forums were created to foster the ethical exchange of links between sites dealing with similar, relevant subject matter, in order to offer visitors broader access to information than that obtainable on either site individually. Unlike automatic linking schemes, these forums require members to editorially review sites requesting a link and make human-intelligence-based decisions on whether establishing a link with them would benefit end users and comply with search engines' quality and best-practice guidelines.

Wednesday, March 10, 2010

Black Hat Technology

* breaks search engine rules and regulations

* creates a poor user experience directly because of the black hat SEO techniques utilized on the Web site

* unethically presents content in a different visual or non-visual way to search engine spiders and search engine users.

A lot of what is known as black hat SEO actually used to be legit, but some folks went a bit overboard, and now these techniques are frowned upon by the SEO community at large. These black hat SEO practices will actually provide short-term gains in terms of rankings, but if you are discovered utilizing these spammy techniques on your Web site, you run the risk of being penalized by search engines. Black hat SEO is basically a short-sighted solution to a long-term problem, which is creating a Web site that provides both a great user experience and all that goes with that.

Black Hat SEO Techniques To Avoid

* Keyword stuffing: Packing long lists of keywords and nothing else onto your site will eventually get you penalized by search engines. Learn how to find and place keywords and phrases the right way on your Web site with my article titled Learn Where and How To Put Keywords In Your Site Pages.

* Invisible text: This is putting lists of keywords in white text on a white background in hopes of attracting more search engine spiders. Again, not a good way to attract searchers or search engine crawlers.

* Doorway Pages: A doorway page is basically a “fake” page that the user will never see. It is purely for search engine spiders, and attempts to trick them into ranking the site higher. Read more about doorway pages.
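Keyword stuffing is often discussed in terms of keyword density, the share of a page's words taken up by one keyword. The sketch below computes that ratio for a single-word keyword only (multi-word phrases are not handled). The function name and sample texts are hypothetical, and no search engine publishes a density cutoff, so treat the output as a rough way to compare a stuffed page with a natural one, not as a ranking rule.

```python
import re
from collections import Counter


def keyword_density(text, keyword):
    """Share of words in text equal to a single-word keyword (case-insensitive)."""
    # Simple tokenizer: runs of letters, digits, and apostrophes count as words.
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return Counter(words)[keyword.lower()] / len(words)


if __name__ == "__main__":
    # Hypothetical samples: a stuffed snippet versus a natural sentence.
    stuffed = "collars collars dog collars cheap collars silver collars"
    natural = ("Our silver dog collars are handmade and sized "
               "for small and large dogs.")
    print(f"stuffed: {keyword_density(stuffed, 'collars'):.1%}")
    print(f"natural: {keyword_density(natural, 'collars'):.1%}")
```

In the stuffed sample, five of the eight words are the keyword; in the natural sentence it is one word out of thirteen.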

Black Hat SEO is tempting; after all, these tricks actually do work, temporarily. They do end up getting sites higher search rankings; that is, until those same sites get banned for using unethical practices. It’s just not worth the risk. Use efficient search engine optimization techniques to get your site ranked higher, and stay away from anything that even looks like Black Hat SEO. Here are a few articles that can get you on the road to understanding search engine optimization:

* Ten Search Engine Optimization Myths - Debunked!: If you're just getting started in search engine optimization for your Web site, you might (unfortunately) have been subjected to a few SEO whoppers. Before you start throwing away your time and money on search engine optimization wild goose chases, read these ten myths of search engine optimization.

* Top Ten Tips to the Top of the Search Engines: Jill Whalen is a recognized industry expert in search engine optimization. Here are her Top Ten Tips to get your site to the top of the search engines.

* Ten Steps to a Well-Optimized Site: Want increased website traffic, higher search engine rankings, and increased customer satisfaction? Read these Ten Steps to a Well-Optimized Site and you'll be well on your way to accomplishing these goals.